Table of Contents

Authority scales when expertise comes first

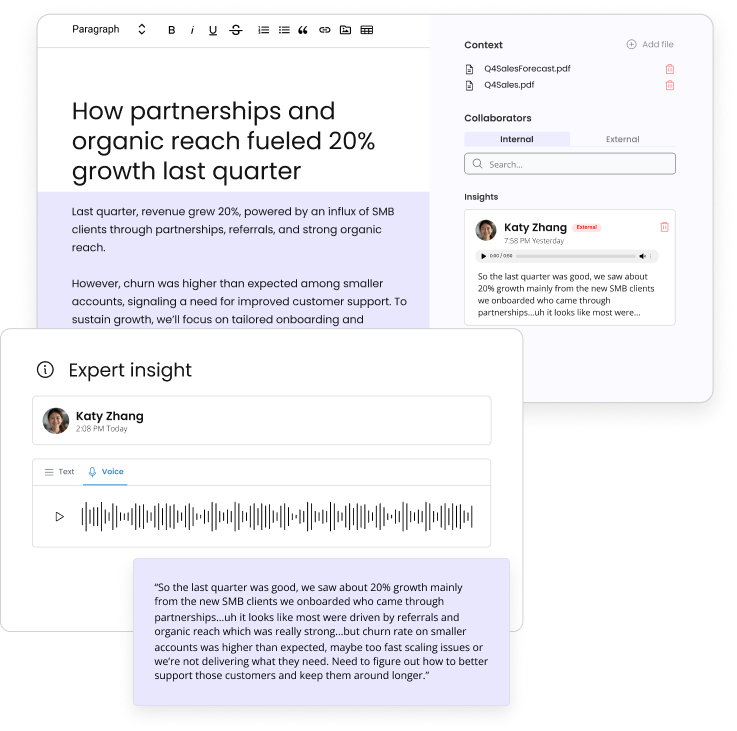

Wordbrew helps teams collect expert insight before AI ever writes a word.

Built for expert-led, review-safe content

- Home

- »

- Content Marketing

- »

- 12 Smart Ways Marketers Can Win with LLM SEO

-

12 Smart Ways Marketers Can Win with LLM SEO

By Wordbrew · 14-minute read


The search game has changed before. We’ve optimized for Panda, Penguin, BERT, Helpful Content, and a long list of Google’s ever-changing whims, but large language models (LLMs) are different. They represent a fundamental shift in how people discover and search for information.

Already, more than 71% of people have used generative search tools (like ChatGPT) instead of traditional search engines, and 14% do so daily. And as of June 2025, nearly 60% of Google searches ended in zero clicks.

SEO didn’t die. It split.

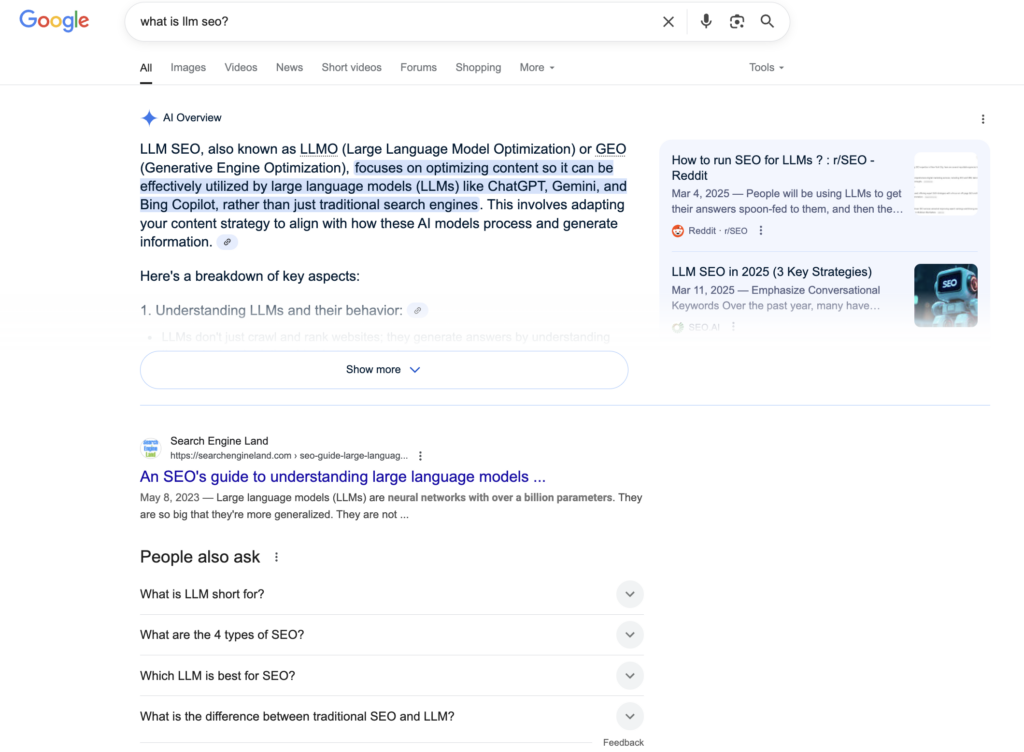

Increasingly, Google’s AI engine will answer the user’s question directly on the search engine results page. The answer appears at the very top of the page, usurping even paid ads for top billing.

This, however, could mean good news for marketers. It may mean your content can quickly inform the user, eliminating the need for them to visit your website to convert.

But when writing for AI, there’s more than Google Search at play. A whole range of LLM-powered tools, such as ChatGPT, Claude, Perplexity, and Copilot, discover content and then point users to our answers.

At Wordbrew, we’ve talked to nearly three dozen marketers, SEO experts, product teams, and data engineers to make sense of what AI means for traditional user discovery by search. Many teams are embracing the evolution (and quietly killing it with LLM SEO) while others are cautious about diving in.

This article captures 12 of the clearest lessons emerging from our conversations with marketers succeeding at SEO in the age of AI, and what it takes to push these large language models to their limits.

This is a living, breathing guide that we’ll continually update as new capabilities and insights emerge. Whether you’re AI-curious or AI-weary (or both), this read gives you a pulse on what’s coming next.

What is LLM SEO (and how is it different from the SEO you know)?

LLM SEO (large language model SEO) is the practice of optimizing content so that LLM-powered tools like ChatGPT (from OpenAI), Claude, Copilot, Perplexity, Gemini, and Google’s generative results can find, interpret, and surface it as an answer.

Here’s an example of AI showing up in Google Search. An “AI Overview” for the search query sits atop the page, outranking paid ads and “People Also Ask.” To the right, you can see the sources Google pulled from.

Instead of trying to rank in a list, the goal is to become the answer itself.

While traditional SEO revolves around search engines matching user queries to keywords on web pages, LLM SEO focuses on how models retrieve and assemble answers.

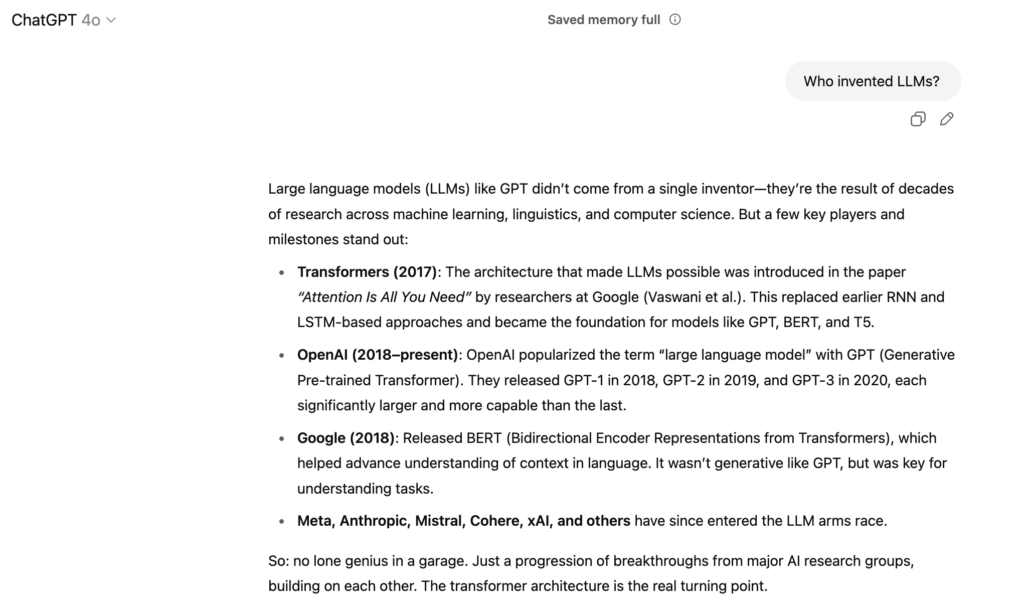

When you ask ChatGPT a question, it may search the web and then compose an answer drawn from multiple sources. For some queries, ChatGPT also includes inline citations (links to the sources it drew from).

As a result, the KPIs that matter are shifting. Traditional metrics like CTR and SERP position still matter, but marketers must also track AI referral traffic, citations in AI model answers, and overall retrieval visibility.

Here’s a look at the differences and similarities between SEO and LLM SEO:

| Traditional SEO | LLM SEO | Both |
| --- | --- | --- |
| Keyword density | Natural language prompts | Clear site structure |
| SERP rankings | Visibility in RAG (retrieval-augmented generation) indexes | Fast, indexable pages |
| Backlinks | Community citations (GitHub, Reddit) | Fresh, updated content |
| Meta descriptions | Snippet-worthy explanations | Schema markup |
| CTR optimization | Semantic clarity | Internal linking |

SEO still matters, but AI matters too. And with AI, clarity and community trust matter more than keyword density and page rankings. Your well-structured article that’s buried on page three of Google may still be served in a Copilot (AI) response.

1. Own a concept clearly and deeply

LLMs don’t reward articles crammed with dozens of fuzzy keywords, yet that’s exactly what many SEO writing platforms help users produce. They coach writers to strategically cram in as many keyword synonyms as possible, at “Google-perfect” frequency.

LLMs, however, reward clarity. When a model retrieves information, it isn’t looking for the most backlinks or keyword variations. It’s looking for the clearest explanation of a concept, preferably one that can stand on its own as an answer to the user’s question.

Patrycja Severs, an SEO and AEO specialist at scandiweb, has seen this firsthand. The AI content that performs best for businesses is “deeply informative” and goes beyond “just surface-level summaries.” Other elements also come into play, but at its crux, LLM SEO is all about “making sense quickly.”

LLMs retrieve answers from authoritative content, even if it doesn’t contain a single user keyword. These models understand context, and they know whether or not it answers the query at hand.

Thus, keyword stuffing and straight SEO mimicry won’t work in LLM search. These models are looking for semantic proximity, concept ownership, and language precision.

If your content explains something better, earlier, or more definitively than anyone else, you have a shot at becoming the default reference. If your content is vague, surface-level, or derivative (it sounds like the same drivel everyone else is writing), your website will get skipped over.

How to optimize for depth and clarity

Instead of optimizing for keyword volume, optimize for authority, which means:

- Using consistent, precise terminology and avoiding fuzzy keywords.

- Including real data, quotes, diagrams, or original frameworks.

- Anticipating and answering the unasked follow-up questions clearly and naturally. (Go deeper than Google’s “People Also Ask.”)

- Writing answers that can stand alone, without relying on the rest of the article for context. Readers should be able to derive a clear understanding from a brief snippet alone.

- Crafting content that performs well in zero-authority systems.

Write for zero-authority systems

A zero-authority system is one in which no single answer from a single source is inherently trusted. LLMs weigh answers from multiple trustworthy sources to arrive at a general understanding of the truth.

This means including authoritative outbound links (to external sites), citations, and unique expert insights in your content, signaling to the model that your content is credible.

There are several reasons for this zero-authority approach:

- It combats hallucinations and misinformation.

- It turns your content from “He said, she said, AI said” into “Here are the facts from a real, checkable source.”

- It signals E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness), something Google and generative AI models value deeply, especially for YMYL (Your Money or Your Life) topics like health, finance, and law.

- It’s part of RAG (retrieval-augmented generation) best practices for fetching documents from large databases. Embedded citation links let the reader follow up for more information as part of a “retrieval” and “grounding” loop.

- Google Search Quality Evaluator Guidelines plainly state that high-quality content should “cite authoritative sources” and “show evidence of accuracy.”

- OpenAI’s own documentation on function calling in enterprise ChatGPT solutions encourages tying LLM output to retrieved, reliable sources.

To own a concept with depth and clarity, make sure your content is original, helpful, and not easily replicated by competitors. Copycat content is everywhere, and you cannot stand out by emulating the competition.

2. Structure like your life depends on it

Structure matters far more than style and clever hooks. AI models don’t read your content in the traditional sense; they parse, chunk, and label. If your content isn’t clearly segmented or semantically wrapped, it might as well be invisible.

That’s why semantic HTML is critical.

According to W3C’s HTML semantics guidelines, semantic tags “clearly describe their meaning to both the browser and the developer,” enabling more accurate parsing and accessibility.

Tags like <article>, <section>, <header>, and <blockquote> tell models what each piece of content is. Search engines and AI rely on this structure to understand your content.

Google reinforces this in its developer documentation style guide, stating that using semantic HTML helps crawlers and AI systems understand what content is important, where it belongs, and how it should be indexed or presented in rich results.

Here’s what works best:

- Use a proper heading hierarchy (<h1> > <h2> > <h3>)

- Wrap sections in semantic tags (<section>, <article>, <aside>)

- Define lists semantically with <ol>, <ul>, and <dl>

- Avoid stuffing content into generic <div> tags without structure; use semantic containers like <section>, <article>, and clearly scoped <li> tags within lists.

- Format answers for retrieval using <blockquote>, <pre>, <code>, and tables where appropriate

- Add structured data using the Schema.org vocabulary in JSON-LD for FAQs, how-tos, products, and more

Make it obvious what each section is, why it exists, and what a model (or a human reader) can take from it. The clearer your content structure, the easier it is for generative engines to extract your expertise.

Emily Demirdonder, Director of Operations and Marketing at Proximity Plumbing, has focused on clear structuring to turn Proximity’s underperforming pages into revenue drivers.

“We’ve been using AI to flag thin or duplicate content and retrain it with our tone and formatting rules,” she says. Pages with fewer than 200 words that were previously on page two of search results are now structured and updated, leading to a 28% jump in conversions over three months.

“The shift has freed up budget we used to waste on guesswork,” says Demirdonder. “We’re spending on visibility that drives booked jobs.”

LLM SEO Structure Cheat Sheet

| Tag / Structure | When & How to Use It |
| --- | --- |
| <article> | Wrap full, standalone content like blog posts, whitepapers, or guides. |
| <section> | Use to group related content under its own heading. Helps both humans and AI understand topical divisions. |
| <header> / <footer> | Use at the start/end of each section to frame the content (intro, summary, CTA). Not just for site-wide layout. |
| <aside> | Use for side notes, pull quotes, related tips, or supplemental info that isn’t core to the main topic. |
| <h1> | Main page title; use only once per page. |
| <h2> / <h3> (etc.) | Use to structure subheadings. Maintain logical hierarchy (no skipping levels). |
| <ul> / <ol> + <li> | Use for lists: bullets for unordered ideas, numbers for ordered steps or rankings. Keep list items short and clear. |
| <dl> + <dt> / <dd> | Use for FAQs, glossaries, or any term-definition or question-answer pair. |
| <blockquote> | Use for long quotes. Always cite the source when possible. |
| <code> / <pre> | Use for technical content, like command-line instructions or programming examples. <pre> preserves spacing and formatting. |
| <table> | Use for real tabular data; include <thead>, <th>, <tr>, and <td>. Avoid using tables for layout. |
| Structured data | Use JSON-LD with Schema.org to mark up FAQs, how-tos, articles, etc. Embed via <script type="application/ld+json">. |
| Pro tips | Use clear, self-contained sections. One idea per block. Avoid dumping insights in generic <div>s. Make content copy/paste-friendly. |

Download this cheat sheet to help structure your content with semantic HTML so AI models can find and extract it.
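A short script can check a draft against two of the rules above: one <h1> per page and no skipped heading levels. Here’s a minimal sketch in Python that uses only the standard library’s html.parser. audit_headings is a hypothetical helper we named for illustration, not part of any SEO tool, and it only inspects the HTML string you pass in.

```python
from html.parser import HTMLParser

HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class HeadingCollector(HTMLParser):
    """Records the numeric level of every <h1>-<h6> start tag, in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        # html.parser passes tag names in lowercase, so "H2" arrives as "h2".
        if tag in HEADING_TAGS:
            self.levels.append(int(tag[1]))


def audit_headings(html):
    """Return a list of problems with the page's heading outline (empty if none)."""
    collector = HeadingCollector()
    collector.feed(html)
    collector.close()

    problems = []
    h1_count = collector.levels.count(1)
    if h1_count != 1:
        problems.append(f"expected exactly one <h1>, found {h1_count}")

    # Moving back up the outline (h3 to h2) is fine; moving down may only
    # go one level at a time (h1 to h2, not h1 to h3).
    for previous, current in zip(collector.levels, collector.levels[1:]):
        if current > previous + 1:
            problems.append(f"skipped level: <h{previous}> followed by <h{current}>")
    return problems


if __name__ == "__main__":
    draft = "<article><h1>LLM SEO</h1><h3>Structure</h3><h2>Freshness</h2></article>"
    print(audit_headings(draft))  # ['skipped level: <h1> followed by <h3>']
```

The check only reports problems. Deciding whether to renumber headings or merge sections is still an editor’s call.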

3. Write with retrieval in mind (Do not bury the lead)

LLMs don’t scan entire pages looking for topical relevance. They pull out chunks (paragraphs, definitions, snippets, or call-outs) that best answer the user’s AI prompt or search query.

So if your answer is buried three scrolls deep in a 3,000-word blog post, it’s unlikely to ever see the light of AI.

To show up in retrieval-augmented generation (RAG) systems like those powering ChatGPT, you need to write in standalone blocks. Think of each section of content as its own source: Could this part of the page be surfaced alone and still make sense?

This is where a modular content strategy becomes crucial:

- Focus each H2 or H3 around a single, searchable question or concept.

- Use introductory sentences that establish context without needing to backtrack.

- Avoid long-winded transitions between ideas; keep each idea contained in its own chunk rather than letting it spill into the next.

- Include takeaways and mini-summaries that models can extract without editing.

Don’t make the AI model do mental gymnastics. Retrieval systems will always prefer straightforward, clearly framed, obviously relevant content that is semantically scoped to the user’s query.
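You can sanity-check whether each section stands alone by splitting a page the way a retrieval system might and reading each piece in isolation. The sketch below assumes heading-based chunking, which starts a new chunk at every <h2> or <h3>. Real RAG pipelines vary, and many split by token count instead. The backreference phrases it flags are our own illustrative heuristic. chunk_by_heading and flag_dependent_chunks are hypothetical names.

```python
from html.parser import HTMLParser


class SectionChunker(HTMLParser):
    """Splits HTML into chunks, starting a new chunk at every <h2> or <h3>.

    A simplified stand-in for heading-based chunking; real retrieval
    pipelines differ (many split by token count or use overlapping windows).
    """

    SPLIT_TAGS = {"h2", "h3"}
    SKIP_TAGS = {"script", "style"}

    def __init__(self):
        super().__init__()
        # Text before the first <h2>/<h3> lands in an untitled intro chunk.
        self.chunks = [{"heading": "", "text": ""}]
        self._in_heading = False
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self._skip_depth += 1
        elif tag in self.SPLIT_TAGS:
            self.chunks.append({"heading": "", "text": ""})
            self._in_heading = True

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self._skip_depth:
            self._skip_depth -= 1
        elif tag in self.SPLIT_TAGS:
            self._in_heading = False

    def handle_data(self, data):
        text = data.strip()
        if self._skip_depth or not text:
            return
        field = "heading" if self._in_heading else "text"
        # Fragments are joined with single spaces (a simplification).
        current = self.chunks[-1]
        current[field] = f"{current[field]} {text}".strip()


def chunk_by_heading(html):
    """Return non-empty {"heading", "text"} chunks in document order."""
    chunker = SectionChunker()
    chunker.feed(html)
    chunker.close()
    return [c for c in chunker.chunks if c["heading"] or c["text"]]


# Illustrative heuristic: openers that lean on earlier content to make sense.
BACKREFERENCES = ("as mentioned", "as noted above", "as we saw", "see above")


def flag_dependent_chunks(chunks):
    """Return headings of chunks whose text opens by pointing back at earlier content."""
    return [c["heading"] for c in chunks if c["text"].lower().startswith(BACKREFERENCES)]
```

A flagged chunk isn’t necessarily wrong. It’s a prompt to rewrite the opening sentence so the section makes sense when surfaced on its own.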

4. Keep content fast and indexable

If your content isn’t crawlable, it might as well not exist, so marketers need to understand what LLM crawlers can actually read.

Most LLMs and RAG systems rely on static HTML to interpret pages. They don’t wait for JavaScript to run, so content that appears only after client-side rendering (where the page loads a bare HTML shell and scripts then build the content) may never be seen. AI models read the raw HTML and move on.

Content should not be buried or locked behind buttons, expandable accordions, modals, or slow- or lazy-loading scripts. LLMs won’t wait for the rest of your page to load; they’ll simply skip over your site.

Tips to keep your content fast, indexable, and accessible:

- Use server-side rendering (SSR), static site generation (SSG), or incremental static regeneration (ISR).

- Use Google Search Console and Bing Webmaster Tools to validate that key pages are crawlable.

- Minimize JavaScript and use semantic HTML wherever possible.

- Keep your sitemap clean and updated.

- Prioritize performance and Core Web Vitals.
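A quick way to test the raw-HTML point above is to check whether the phrases that matter appear in the HTML your server returns. Fetch that HTML without a browser (for example, with curl). This Python sketch assumes you’ve already fetched it and passes it in as a string. It ignores text inside <script>, <style>, and <template> tags, because that text isn’t rendered as page content unless scripts run. missing_from_raw_html is a hypothetical helper name.

```python
from html.parser import HTMLParser


class StaticTextExtractor(HTMLParser):
    """Collects text present in raw HTML, skipping script, style, and template contents."""

    HIDDEN_TAGS = {"script", "style", "template"}

    def __init__(self):
        super().__init__()
        self.parts = []
        self._hidden_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.HIDDEN_TAGS:
            self._hidden_depth += 1

    def handle_endtag(self, tag):
        if tag in self.HIDDEN_TAGS and self._hidden_depth:
            self._hidden_depth -= 1

    def handle_data(self, data):
        if not self._hidden_depth:
            self.parts.append(data)


def missing_from_raw_html(raw_html, key_phrases):
    """Return the key phrases that do NOT appear in the page's static text.

    Matching is case-insensitive and whitespace-normalized.
    """
    extractor = StaticTextExtractor()
    extractor.feed(raw_html)
    extractor.close()
    text = " ".join(" ".join(extractor.parts).split()).lower()
    return [p for p in key_phrases if " ".join(p.split()).lower() not in text]


if __name__ == "__main__":
    # A client-rendered shell: the pricing text only exists inside a script.
    shell = '<div id="root"></div><script>render("Pricing starts at $49")</script>'
    print(missing_from_raw_html(shell, ["Pricing starts at $49"]))  # ['Pricing starts at $49']
```

An empty result means the phrases are already in the static HTML. Anything returned is content that probably depends on client-side rendering.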

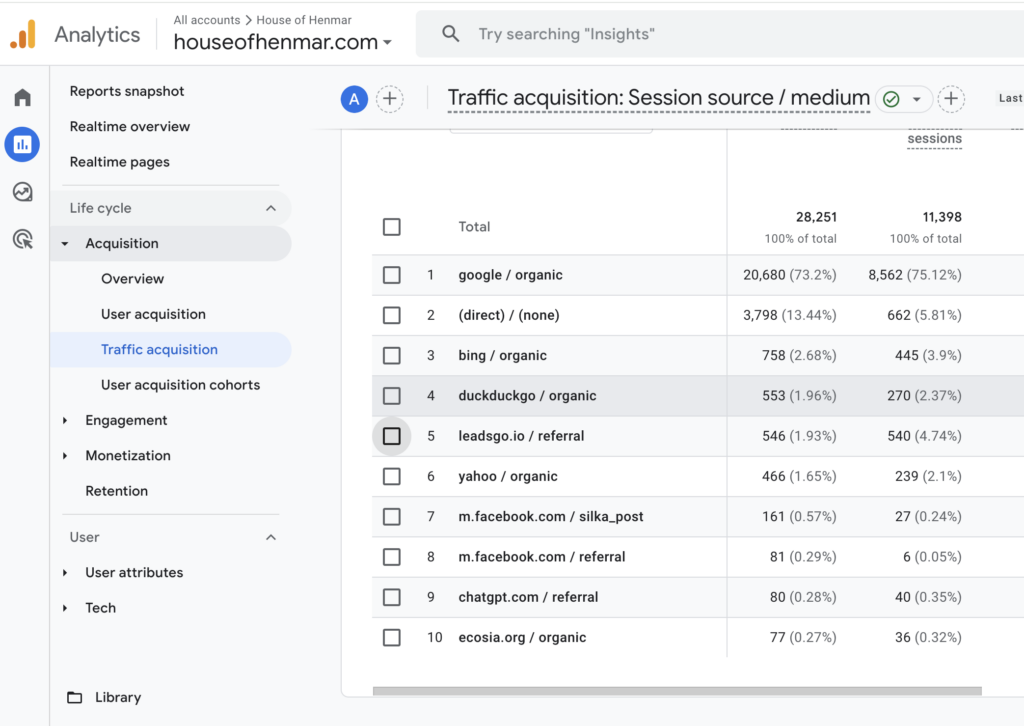

Here’s a look at the Google Analytics traffic acquisition report. It offers insights on traffic from search, AI engines, and other platforms.

This might sound like traditional SEO advice, because many of the same principles apply to LLM SEO. The difference is the cost of getting it wrong: in traditional search, you rank poorly; in LLM search, your information may not surface at all.

5. Stay fresh

Content has a half-life. Even if it’s indexed and well-structured, models eventually deprioritize outdated information. Systems like Perplexity and Google’s AI Overviews prioritize recency and accuracy.

Freshness is more about maintaining what’s already working than pushing out new blog posts. If you wrote a helpful article explaining ERPs that was last updated in 2021, but a competitor just refreshed their post yesterday, LLMs are far more likely to retrieve their content than yours.

Here’s how smart teams keep content relevant:

- Add a “last updated” timestamp that actually updates.

- Use change logs or update notes in product documentation.

- Regularly review top-performing content (e.g., every 30, 60, or 90 days) and refresh where needed.

- Remove or redirect outdated pages to avoid model confusion.

- Revisit semantic drift. Language evolves, and so should your syntax and phrasing.
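A “last updated” timestamp only helps if it tracks real edits. Here’s a minimal Python sketch that assumes you keep a record of each page’s last substantive update. The dictionary in the example is hypothetical, and the example.com URLs are placeholders. The sketch lists pages that have fallen outside a 90-day review window. It also writes the same dates into the <lastmod> field of a sitemaps.org XML sitemap, which keeps the sitemap in step with your content.

```python
import xml.etree.ElementTree as ET
from datetime import date, timedelta

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def stale_pages(last_updated, today, max_age_days=90):
    """Return URLs (sorted) whose last substantive update is older than the review window."""
    cutoff = today - timedelta(days=max_age_days)
    return sorted(url for url, updated in last_updated.items() if updated < cutoff)


def build_sitemap(last_updated):
    """Build a sitemaps.org XML sitemap with a <lastmod> date for each URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, updated in sorted(last_updated.items()):
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        # W3C date format (YYYY-MM-DD), which the sitemap protocol accepts.
        ET.SubElement(entry, "lastmod").text = updated.isoformat()
    return ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical records of real content edits (not CMS save timestamps).
    pages = {
        "https://example.com/erp-guide": date(2021, 3, 1),
        "https://example.com/llm-seo": date(2025, 6, 1),
    }
    print(stale_pages(pages, today=date(2025, 7, 1)))  # ['https://example.com/erp-guide']
    print(build_sitemap(pages))
```

Feed the dates from real edits. Bumping <lastmod> on every deploy defeats the purpose of the signal.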

When a top-performing post is deprioritized, it’s often a matter of good content gone stale.

And it’s not just blog articles. Scandiweb’s Patrycja Severs says one client saw a 100% increase in ChatGPT and Perplexity traffic after updating its Google Business Profile. The updated listing used clear language aligned with ready-to-buy customers, increasing leads and conversions.

6. Answer real prompts before they’re asked

Most LLM interactions begin with a prompt like “How do I…?” or “What’s the difference between…?”

Yet few marketers write with that format in mind.

Prompt-shaped content performs better in generative search because it mirrors how humans use language.

Vibin Babuurajan, a co-founder at Epic Slope Partners, says this approach gives solid results. He has recommended “conversion-focused topics” to clients who are “instantly seeing increases in conversion from LLM SEO channels.”

Here’s what helps:

- Use subheads that mimic how people actually search and prompt, such as “Tool A vs Tool B,” “Alternatives to [competitor],” and “How to choose the right [category].”

- Write FAQ-style content that sounds natural. Avoid obviously leading, unnatural questions (e.g., “Why is [my company] the best?”).

- Incorporate micro-summaries in answer form (“RAG stands for retrieval-augmented generation, a technique used by LLMs to…”)

- Think like a prompt engineer: What would you type to get this answer?
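Question-and-answer content can also be labeled for machines. This Python sketch builds Schema.org FAQPage markup in JSON-LD. FAQPage, Question, and Answer are real Schema.org types; faq_jsonld and faq_script_tag are hypothetical helper names. Markup should describe questions and answers that are already visible on the page. It doesn’t guarantee retrieval or rich results.

```python
import json


def faq_jsonld(pairs):
    """Build Schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    # Escape "</" so an answer containing "</script>" can't end the script tag early.
    return json.dumps(data, indent=2).replace("</", "<\\/")


def faq_script_tag(pairs):
    """Wrap the JSON-LD in the script element that pages embed it in."""
    return '<script type="application/ld+json">\n' + faq_jsonld(pairs) + "\n</script>"


if __name__ == "__main__":
    print(faq_script_tag([
        ("What is LLM SEO?",
         "LLM SEO is the practice of optimizing content so that large language "
         "models can find, interpret, and surface it as an answer."),
    ]))
```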

Models don’t match exact strings; they infer meaning. The closer your content gets to answering a real-world question, the higher your odds of being retrieved.

7. Earn citations where it counts

LLMs echo the open web. When real people cite your content on platforms like GitHub, Reddit, Hacker News, or Stack Overflow, those mentions can influence both training data and retrieval relevance.

When users trust your content enough to cite it in a community thread or link to it in a README, models may treat the shared link as a high-value upvote.

You can earn citations by publishing content worth referencing, and then helping it reach the right communities. (Hint: Don’t make interns, or Fiverr freelancers, spam platforms.)

In this Hacker News thread, the discussion centers on data in an external link.

Start here:

- Share code samples, benchmarks, or open data others can use.

- Participate in discussions where your content is relevant, not promotional.

- Make your answers quotable: one paragraph, one point, clearly explained.

- Optimize posts for easy copy-paste (short snippets, summaries, visuals).

- Get mentioned in changelogs, GitHub issues, or “helpful links” lists.

You don’t need hundreds of backlinks. You need a few influential citations in high-signal places.

8. Break the big post into clusters

The 3,000-word all-in-one SEO article isn’t dead, but it may overwhelm LLMs.

Large blocks of text covering lots of diverse ground confuse retrieval systems. They’re harder to chunk and parse, and often too broad to surface for any one query.

Instead, content that’s broken into thematic clusters (short, focused pages that interlink toward related articles) often performs better.

This is where the topic cluster model (long used in SEO) becomes even more valuable. It increases the odds that something you’ve written will align cleanly with a specific LLM prompt, even if it lacks traditional crawl depth.

Tactical shifts for this approach include:

- Breaking complex topics into 500–800 word subpages.

- Interlinking pages using anchor text that reflects real search queries.

- Using breadcrumb navigation or hierarchical URLs (e.g. /llm-seo/structure).

- Making each page its own “answerable unit.”
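Hierarchical URLs like /llm-seo/structure can also be described to machines with Schema.org BreadcrumbList markup. The sketch below assumes one breadcrumb per path segment. It derives display names from URL slugs unless you supply better ones. breadcrumb_jsonld is a hypothetical helper, and example.com is a placeholder domain.

```python
import json
from urllib.parse import urlsplit


def breadcrumb_jsonld(page_url, names=None):
    """Build Schema.org BreadcrumbList JSON-LD with one item per URL path segment.

    `names` maps a slug (e.g. "llm-seo") to a display name; unmapped slugs
    fall back to a title-cased version (e.g. "structure" -> "Structure").
    """
    names = names or {}
    parts = urlsplit(page_url)
    origin = f"{parts.scheme}://{parts.netloc}"
    segments = [s for s in parts.path.split("/") if s]

    items = []
    for position, slug in enumerate(segments, start=1):
        items.append({
            "@type": "ListItem",
            "position": position,  # 1-based, as BreadcrumbList expects
            "name": names.get(slug, slug.replace("-", " ").title()),
            "item": origin + "/" + "/".join(segments[:position]),
        })

    data = {"@context": "https://schema.org", "@type": "BreadcrumbList", "itemListElement": items}
    return json.dumps(data, indent=2)


if __name__ == "__main__":
    print(breadcrumb_jsonld("https://example.com/llm-seo/structure", names={"llm-seo": "LLM SEO"}))
```

Supplying names explicitly is usually worth it. Slugs like "llm-seo" don’t title-case into the terms readers actually use.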

Clarity and specificity win. It’s better to own ten small ideas well than to bury them all in one mega-post the model can’t lift from.

Tip: Writing long guides (like best practices, case studies and 40-page business reports) still has merit, but be clear about who you want the end reader to be.

9. Treat non-text content like text

LLMs parse and pull insights from alt text, transcripts, video captions, and code annotations. This sort of multimodal retrieval is taking off.

ChatGPT can quote from podcast transcripts, Perplexity can cite or link to YouTube videos, and Copilot can reference GitHub READMEs. If your content exists in multiple formats, each version needs to be structured and readable for AI models.

Here’s what to optimize:

- Write clear, descriptive alt text for images (don’t keyword stuff; accurately describe the image).

- Use video captions and upload transcripts.

- Include code comments that explain the why, not just the what.

- Apply schema like VideoObject, HowTo, or FAQPage when applicable.

- Treat documentation and support articles as real content, not just help-center debris.
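For video, the transcript doesn’t have to live only in a separate file. Schema.org’s VideoObject type has a transcript property, so one option is to include the transcript text in the page’s JSON-LD alongside the basic video details. This Python sketch assumes you already have the transcript as plain text. video_jsonld is a hypothetical helper, and any URLs you pass to it stand in for your own.

```python
import json


def video_jsonld(*, name, description, thumbnail_url, upload_date, content_url, transcript):
    """Build Schema.org VideoObject JSON-LD that carries the full transcript as text."""
    data = {
        "@context": "https://schema.org",
        "@type": "VideoObject",
        "name": name,
        "description": description,
        "thumbnailUrl": thumbnail_url,
        "uploadDate": upload_date,  # ISO 8601 date, e.g. "2025-06-30"
        "contentUrl": content_url,
        "transcript": transcript,
    }
    # Refuse to emit partial markup: every field above should have a real value.
    missing = [key for key, value in data.items() if not value]
    if missing:
        raise ValueError(f"missing values for: {', '.join(missing)}")
    # Escape "</" so transcript text can't close a surrounding <script> tag early.
    return json.dumps(data, indent=2).replace("</", "<\\/")
```

Raising an error on empty fields is a deliberate choice in this sketch. It assumes incomplete markup is worse than none.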

Models are smart enough to reply to users’ text-based queries with tables, graphs, videos, and social clips, making non-text assets critical.

The better you structure all of your content, the more opportunities you have to be featured in an AI response.

10. Make it human-rated

User feedback matters. In tools like ChatGPT and Claude, users can thumbs-up or flag an answer. Over time, these feedback loops help models refine which sources they trust.

In this response to my ChatGPT query, I’m given the option to thumbs-up or thumbs-down its response.

Even if your company’s name is not directly mentioned, user feedback on your content’s helpfulness still matters.

You can influence human ratings by:

- Making your content easy to understand and share.

- Writing in a tone that’s helpful, not salesy or evasive.

- Providing clear, up-to-date, well-sourced information that doesn’t require clicking dozens of links to verify.

- Encouraging readers to engage with your content on platforms where it gets picked up.

Sasha Berson, Chief Growth Officer at Grow Law, says these human feedback loops are already producing results.

“We’re beginning to observe measurable traffic and lead activity influenced by platforms like ChatGPT, Perplexity, and especially Google’s AI Overviews,” Berson says.

In a review of 1,200 business-related queries across 11 cities, law firms cited in AI Overviews saw a 34% increase in consultations.

“These [AI] platforms tend to recommend a small number of sources,” says Berson, which makes earning citations increasingly important.

11. Think like a model, not a marketer

LLMs don’t get the punchline. They want clear language, not clever wording. Models understand consistent patterns, well-defined concepts, and straightforward language that aligns with how people speak.

Here, marketers often get in their own way. The instinct is to differentiate through language, for example by inventing a new name for something old. You might sound more unique, but you’ll also be completely unfindable to LLMs.

Tips for aligning with LLMs:

- Use plain language over brand language.

- Reinforce key concepts consistently across pages.

- Avoid mixing terminology unless you’re explicitly drawing a contrast.

- Define new or proprietary terms clearly and repeatedly.

- Focus on coherence over novelty.

Jamilyn Trainor, Senior Project Manager at Müller Expo Services, has seen the benefits of this approach firsthand. After restructuring their content to front-load their value proposition and align with how AI models understand content, their Modular Booth Design page saw a 25% increase in qualified inbound leads and a 20% reduction in sales cycle length.

“We flipped our convention of burying value at the bottom of the page,” she says. “Now, people find us faster and they’re a better fit.”

12. Play it clean and use open signals whenever possible

As copyright scrutiny around AI increases, there’s speculation that models will begin to prefer clearly licensed or explicitly open-source content.

This is especially relevant for private RAG systems, which need clean, legally safe sources of information. If your content carries a permissive license or is clearly marked as reusable, you may end up being included where competitors aren’t.

Forward-looking companies are already experimenting by:

- Applying Creative Commons licenses to select content hubs or documentation.

- Publishing open datasets or frameworks others can cite and reuse.

- Making terms of use explicit and easy for crawlers to find.

- Indicating licensing in metadata, GitHub repos, or schema markup.
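Here’s one way “indicating licensing in metadata” can look, as a minimal Python sketch. Schema.org’s CreativeWork types, including Article, have a license property that takes a URL. HTML also defines a rel="license" link type. CC BY 4.0 appears here purely as an example, because choosing a license, if any, is a business and legal decision. The helper names and sample values are hypothetical.

```python
import json

# Example license only; choose yours deliberately.
CC_BY_4 = "https://creativecommons.org/licenses/by/4.0/"


def article_jsonld(headline, publisher, date_modified, license_url=CC_BY_4):
    """Build Schema.org Article JSON-LD that states the content's license."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "publisher": {"@type": "Organization", "name": publisher},
        "dateModified": date_modified,  # ISO 8601 date
        "license": license_url,  # CreativeWork.license accepts a URL
    }
    return json.dumps(data, indent=2)


def license_link(license_url=CC_BY_4):
    """Return an HTML <link> element using the standard rel="license" link type."""
    return f'<link rel="license" href="{license_url}">'


if __name__ == "__main__":
    print(article_jsonld("Example Guide", "Example Co", "2025-01-15"))
    print(license_link())
```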

This won’t guarantee inclusion, but openness helps. Epic Slope’s Vibin Babuurajan says one of their SaaS clients saw a 300% increase in their ChatGPT referral traffic after aligning their content with retrieval-friendly signals.

What it takes to win and keep winning at LLM SEO

Brands winning at content discoverability today are optimizing for search engines and large language models. These marketers are creating structured content that clearly answers questions in user-preferred language, offering unique perspectives and expert insights that competitors can’t easily replicate.

This shift in how discoverability works is a win for consumers and human marketers, as it rewards clarity and genuine authority rather than generic, keyword-stuffed bloat.

Platforms built for human-AI collaboration, like Wordbrew, are helping marketers produce high-value content faster.

Google is rewarding human-AI hybrid content. After its recent algorithm update, an estimated 86% of high-ranking pages contained some AI-generated content. But fully AI-generated pages, with no human editing or oversight, rarely ranked in the top spot. Human involvement matters: top pages used a mix of AI and human guidance.

If you’re looking to adapt your human-led strategy for AI-first search, Wordbrew can help. Find out how we make sure your answers are the ones that AI models and customers read and keep coming back to.